Inferencing LLMs on Smartphones

A few days ago, I installed PocketPal on my smartphone: a privacy-friendly AI assistant for your pocket. The app runs compact large language models (LLMs) directly on the smartphone. Is it just a gimmick, or a first step into a new era of AI use that prioritizes privacy and offline functionality?

The idea that generative AI no longer runs only in huge data centers but also on our smartphones still sounds somewhat like science fiction to me. The possibility of locally executing small language models on mobile devices not only marks a technological milestone but could fundamentally change how we work with AI – towards more privacy, independence, and personal control.

PocketPal is an interesting alternative to cloud-based AI assistants in this respect, especially for users who place great value on data protection or want to work independently of the internet. Unlike cloud services, where data is analyzed, stored, and potentially used for training purposes, data processing in PocketPal occurs exclusively locally on the smartphone. Sensitive documents, personal notes, or confidential conversations are not transmitted to third parties.

I wanted to find out which language models are available for my Pixel 8 Pro and whether the app remains a technical gimmick or is genuinely useful. I took a closer look and recorded a screencast about it. In the screencast, I show:

- how to create a system prompt as a template, called a Pal in PocketPal

- how to start a chat with a local language model

- how I ask a question about DNS configuration

- how I interrupt the answer and regenerate it with the previously created system prompt, which results in a significantly better answer

- how I then ask why the sky is blue

- how I regenerate this answer too, this time with a different LLM

Installing Models

Language models can be downloaded from Hugging Face¹ directly within the app, so the selection is large. If you prefer not to use this, you can deny the app internet access (possible in GrapheneOS) and instead load an LLM in GGUF² format that is already stored on the device. The small Gemma and Granite models, which I mainly experimented with, install within minutes and require only 2–4 GB of storage space.

| Model | Manufacturer | Parameters | Context Length (tokens) | Tokens/sec³ |
|---|---|---|---|---|
| Granite 4 Tiny | IBM | 6.94 billion | 1,048,576 | ~6 |
| Gemma 3n | Google | 6.87 billion | 32,768 | ~4 |
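How can models with almost 7 billion parameters fit into a few gigabytes? GGUF files usually store the weights quantized, so the file size is roughly parameters × bits per weight ÷ 8 bytes. The following Python sketch is a plausibility check under stated assumptions: it uses llama.cpp's Q4_0 and Q8_0 block layouts (4.5 and 8.5 bits per weight including the per-block scale), which quantization the app actually downloads is an assumption, and real files differ somewhat because of metadata and tensors kept at other precisions.

```python
# Q4_0 packs 32 weights into 16 bytes of 4-bit values plus a 2-byte fp16 scale.
Q4_0_BITS_PER_WEIGHT = (16 + 2) * 8 / 32  # = 4.5
# Q8_0 packs 32 weights into 32 bytes of 8-bit values plus a 2-byte fp16 scale.
Q8_0_BITS_PER_WEIGHT = (32 + 2) * 8 / 32  # = 8.5


def estimated_size_gb(parameters, bits_per_weight=Q4_0_BITS_PER_WEIGHT):
    """Rough file size in GB (10^9 bytes); ignores metadata and higher-precision tensors."""
    return parameters * bits_per_weight / 8 / 1e9


# Parameter counts taken from the table above.
for name, params in [("Granite 4 Tiny", 6.94e9), ("Gemma 3n", 6.87e9)]:
    q4 = estimated_size_gb(params)
    q8 = estimated_size_gb(params, Q8_0_BITS_PER_WEIGHT)
    print(f"{name}: ~{q4:.1f} GB at Q4_0, ~{q8:.1f} GB at Q8_0")
```

At 4-bit quantization both models land just under 4 GB, consistent with the storage requirement mentioned above; 8-bit variants would need roughly twice as much.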

Practical Use for Everyday Life

But what can you actually expect from a language model that is tiny compared to ChatGPT, for example, and also runs on low-performance hardware? Can anything useful be done with it?

Yes, definitely. For instance, you can translate texts or have things explained (why is the sky blue?). You can also use the AI for learning support, for example when learning a new language. A system prompt for practicing English could look like this:

Role: Act as my personal English teacher and engage in relaxed, everyday conversation to help me practice and improve my practical English skills.

Instructions:

- Correction: Whenever I write a message in English, correct it so that it sounds natural, the way a native speaker would phrase it. Give the correction concisely in the format “Better: [corrected version].” and highlight the changes in bold. If no correction is needed, skip this line.

- Translation: When I write in German, precisely translate the message into natural, everyday English and respond with the translated version.

- Engagement: Respond naturally to the content of my message. Keep the conversation going by asking relevant, casual follow-up questions (e.g., about daily life, hobbies, or general topics).

- Tone and Style: Write in a friendly, conversational, and authentic manner—similar to how an English-speaking friend would chat. Avoid formal or instructional language.

- Focus: Stick to light, everyday small talk topics (e.g., weather, plans, interests, experiences) to create a pleasant and immersive learning environment.

Example Interaction:

- If I say: “I go to cinema yesterday.”

- You reply: """ Better: I went to the cinema yesterday.

So, what did you think of the movie? Anything you’d recommend? """

- If I write in German: “Ich habe heute Morgen Kaffee getrunken.”

- You reply: """ Say: I drank coffee this morning.

Great! How do you usually drink your coffee – black or with milk? """

Output Guidelines:

- Keep responses concise but natural.

- Prioritize fluid dialogue over long explanations.

- Ensure corrections are immediate and minimal.

- Maintain a relaxed, encouraging tone.

Since typing on a smartphone can be slow and cumbersome, I would like to point out the app Sayboard, which converts speech to text. Here, too, processing takes place entirely on the device. The app uses the Vosk library for speech recognition and requires a speech recognition model, which can be downloaded either via the integrated downloader or manually.

I also find my Linux Terminal system prompt quite useful in some situations. When I am sitting in the server room without internet access, PocketPal is an always-available AI assistant that gives concrete answers to my system administration questions, for example: How do I add an alternative DNS server? The following system prompt produces short, precise answers, which is particularly advantageous on low-performance devices because fewer tokens have to be generated.

You are an expert in Linux and shell scripting with deep technical knowledge and practical experience. Answer each request with a directly usable, complete, and syntactically correct code snippet as the main answer. The code must be minimal, functional, and immediately executable. Only add an explanation if it is essential for understanding or safety – limited to a maximum of three precise, factual sentences without filler words. Follow best practices: prioritize practical applicability over theoretical details, security aspects over simplicity, and standard tools over individual special solutions. Avoid any introductions, digressions, or unnecessary explanations.

Neat Features

One of PocketPal’s features: system prompts can be saved as templates (Pals), like the Linux Terminal example above, and can even be shared with the community, although I haven’t tried sharing yet. The templates can be selected directly from the chat input line, which also makes it easy to switch the system prompt or language model in the middle of a conversation.

I also find it quite practical that you can regenerate an AI response if you are not satisfied with the result. You can even switch to a different language model for the new attempt.
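Conceptually, a Pal is a reusable system prompt that is sent ahead of the conversation. The following Python sketch illustrates templates, switching, and regenerating with another model, assuming the common chat format of role/content messages. The classes, the model names, and the `fake_generate` stand-in are hypothetical illustrations, not PocketPal's actual implementation.

```python
from dataclasses import dataclass, field


@dataclass
class Pal:
    """Hypothetical model of a Pal: a named system prompt with a default model."""
    name: str
    system_prompt: str
    model: str


@dataclass
class Chat:
    pal: Pal
    messages: list = field(default_factory=list)  # user/assistant turns, no system prompt

    def prompt_messages(self):
        # The current Pal's system prompt is prepended on every request, so assigning
        # a different Pal mid-conversation changes the instructions for later answers.
        return [{"role": "system", "content": self.pal.system_prompt}] + self.messages

    def reply(self, generate, model=None):
        # Answer the current history with the Pal's default model unless overridden.
        answer = generate(model or self.pal.model, self.prompt_messages())
        self.messages.append({"role": "assistant", "content": answer})
        return answer

    def regenerate(self, generate, model=None):
        # Discard the last answer and produce a new one, optionally with another model.
        if self.messages and self.messages[-1]["role"] == "assistant":
            self.messages.pop()
        return self.reply(generate, model)


def fake_generate(model, messages):
    # Hypothetical stand-in for on-device inference: echoes model and last user message.
    return f"[{model}] answer to: {messages[-1]['content']}"


linux = Pal("Linux Terminal", "You are an expert in Linux and shell scripting ...", "granite-4-tiny")
chat = Chat(linux)
chat.messages.append({"role": "user", "content": "How do I add an alternative DNS server?"})
chat.reply(fake_generate)
print(chat.regenerate(fake_generate, model="gemma-3n"))
# prints "[gemma-3n] answer to: How do I add an alternative DNS server?"
```

Keeping the system prompt separate from the stored turns is what makes switching cheap in this sketch: the history stays untouched, and only the first message of the next request changes.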

To get the best results from a system prompt, PocketPal offers extensive generation settings that directly affect how the language model responds. Together with the choice of the model itself, they allow precise control over its behavior:


| Option | Meaning |
|---|---|
| N PREDICT | Maximum length of the response in tokens. |
| TEMPERATURE | Controls creativity. A high value leads to more surprising word choices, a low value to predictable, safe answers. |
| TOP K | Limits the selection to the K most probable tokens. A low value keeps the response precise, a higher one makes it more varied. |
| TOP P | Keeps only the most probable tokens whose combined probability reaches P. A high value allows more creative word choice, a low value ensures focused and coherent answers. |
| MIN P | Discards tokens whose probability is below MIN P times that of the most likely token. Helps avoid nonsensical or off-topic responses. |
| XTC THRESHOLD | Minimum probability from which tokens become candidates for removal ("exclude top choices"); the least likely of these candidates is always kept. A value above 0.5 disables this function. |
| XTC PROBABILITY | Probability that this removal is applied at a given step. At 0, the function is disabled. |
| TYPICAL P | Prefers tokens that are about as surprising as is typical at that point in the text (locally typical sampling). A value of 1.0 disables this. |
| PENALTY LAST_N | Number of most recent tokens that are checked for repetitions. A larger value prevents repetitions over longer texts. |
| PENALTY REPEAT | Penalizes tokens that already appear among those recent tokens. A higher value results in more varied phrasing. |
| PENALTY FREQ | Penalizes tokens in proportion to how often they have already been used. Encourages the AI to use a larger vocabulary. |
| PENALTY PRESENT | Applies a fixed penalty to every token that has already been used, regardless of how often. Promotes more diverse content. |
| MIROSTAT | Adaptive sampling that continuously adjusts token selection to keep the "surprise" of the text near a target value, balancing creativity and coherence. V1 and V2 are two versions of the algorithm. |
| SEED | A fixed starting value for the random number generator. With the same seed, model, prompt, and settings, you get the same result. |
| JINJA | Formats the conversation with the model's own Jinja chat template, which many modern models need to process prompts correctly. |

These settings allow precise tuning of the response behavior for specific use cases.
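To make several of these settings more concrete, here is a simplified Python sketch of how they act on the model's scores (logits) for the next token. It is not PocketPal's code: the penalty formula is modeled on llama.cpp's penalty sampler (the option names above match llama.cpp's parameters), the filter order shown is just one plausible choice, XTC, TYPICAL P, and MIROSTAT are left out, and the toy logits are made up.

```python
import math
import random
from collections import Counter


def apply_penalties(logits, history, last_n=64, repeat=1.1, freq=0.0, present=0.0):
    """PENALTY LAST_N / REPEAT / FREQ / PRESENT, modeled on llama.cpp's penalty sampler."""
    recent = history[-last_n:] if last_n > 0 else []
    out = dict(logits)
    for tok, count in Counter(recent).items():
        if tok not in out:
            continue
        logit = out[tok]
        # Repeat penalty makes the token less likely whatever the sign of its logit.
        logit = logit * repeat if logit <= 0 else logit / repeat
        # Frequency penalty grows with each use; presence penalty is applied once.
        out[tok] = logit - count * freq - present
    return out


def softmax(logits, temperature):
    """TEMPERATURE (> 0): divide logits by T, then normalize to probabilities."""
    scaled = {tok: value / temperature for tok, value in logits.items()}
    peak = max(scaled.values())
    exps = {tok: math.exp(v - peak) for tok, v in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def normalize(probs):
    total = sum(probs.values())
    return {tok: p / total for tok, p in probs.items()}


def top_k(probs, k):
    """TOP K: keep only the k most probable tokens."""
    ranked = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)
    return normalize(dict(ranked[:k]))


def top_p(probs, p):
    """TOP P: keep the most probable tokens until their cumulative probability reaches p."""
    kept, cumulative = {}, 0.0
    for tok, prob in sorted(probs.items(), key=lambda kv: kv[1], reverse=True):
        kept[tok] = prob
        cumulative += prob
        if cumulative >= p:
            break
    return normalize(kept)


def min_p(probs, ratio):
    """MIN P: drop tokens less likely than ratio times the probability of the best token."""
    threshold = ratio * max(probs.values())
    return normalize({tok: prob for tok, prob in probs.items() if prob >= threshold})


def sample(logits, history, temperature=0.8, k=40, p=0.95, minp=0.05, seed=None):
    """SEED: the same seed and inputs always yield the same token."""
    rng = random.Random(seed)
    probs = softmax(apply_penalties(logits, history), temperature)
    probs = min_p(top_p(top_k(probs, k), p), minp)
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]


logits = {"blue": 3.0, "grey": 1.5, "green": 0.5, "purple": -1.0}
print(sample(logits, history=["the", "sky", "is"], seed=42))
```

Lowering `temperature` or `k` narrows the candidate set toward "blue", while raising them gives the less likely colors a chance; fixing `seed` makes any of these experiments reproducible.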

Benchmark Test

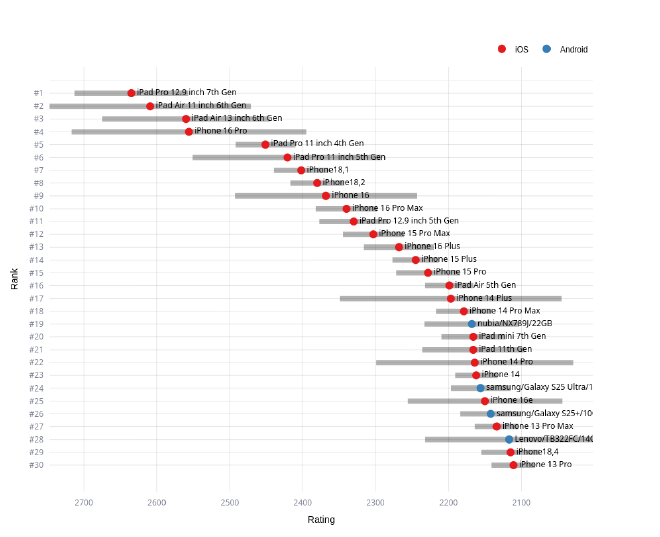

PocketPal comes with an integrated benchmark test. You select an LLM, and after a few minutes, the result is available. The history is preserved, which I find quite practical because you can tweak various settings and measure their impact on inference speed and compare them with previous results. The results can also be shared publicly – if desired.

My Pixel 8 Pro made it to rank 264 out of 1191 devices in the AI Phone Leaderboard. The top ranks are predominantly held by iOS devices.

My Conclusion

PocketPal serves a niche where independence and privacy are the top priorities. For me, it is a first, very promising step towards a more decentralized AI future that returns control to the user.

The true strength of PocketPal lies in the combination of user-friendliness and powerful features. The integration of Hugging Face, the ability to save your own prompts as reusable Pals, and the granular settings to control response behavior make the app an extremely flexible tool.

The response speed of 4–6 tokens per second is completely sufficient for use cases like translations, looking things up, or generating short texts and code snippets. Development is progressing rapidly, and more efficient models will continuously push these boundaries.
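To put 4–6 tokens per second into perspective, here is a back-of-the-envelope calculation in Python. It assumes the rule of thumb from the footnote of roughly ¾ of a word per token (an approximation that varies by language and tokenizer) and ignores the time the model needs to process the prompt before the first token appears.

```python
WORDS_PER_TOKEN = 0.75  # rough rule of thumb; depends on language and tokenizer


def seconds_to_generate(words, tokens_per_second):
    """Generation time only; prompt processing before the first token is ignored."""
    tokens = words / WORDS_PER_TOKEN
    return tokens / tokens_per_second


for speed in (4, 6):
    print(f"150-word answer at {speed} tokens/s: about {seconds_to_generate(150, speed):.0f} s")
# prints "... about 50 s" for 4 tokens/s and "... about 33 s" for 6 tokens/s
```

A short answer or code snippet therefore takes well under a minute, which fits the use cases above; longer texts take correspondingly longer.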

1. Hugging Face is an open-source platform and community that provides AI models, tools, and resources for the collaborative development and use of machine learning applications.

2. GGUF (GPT-Generated Unified Format) is a file format for storing language models, often in quantized form, so that they can run efficiently on standard hardware.

3. A token roughly corresponds to ¾ of a word in German. The higher the tokens/sec value, the faster a response is generated, so the same waiting time yields a longer, more detailed answer.